One of the leading companies offering alternatives to lithium batteries for the grid just got a loan from the US Department of Energy. Eos Energy makes zinc-halide batteries, which the firm hopes could one day be used to store renewable energy at a lower cost than is possible with existing lithium-ion batteries. The loan is the first “conditional commitment” from the DOE’s Loan Programs Office to a battery maker focused on alternatives to lithium-ion cells. The agency has previously funded other climate technologies. Today, lithium-ion batteries are the default choice to store energy in devices from laptops to electric vehicles. The cost of these kinds of batteries has fallen, but there’s a growing need for even cheaper options. Solar panels and wind turbines only produce energy intermittently, and to keep an electrical grid powered by these renewable sources humming around the clock, grid operators need ways to store that energy until it is needed. The US grid alone may need between 225 and 460 gigawatts of long-duration energy storage capacity. New batteries, like the zinc-based technology Eos hopes to commercialize, could store electricity for hours or even days at low cost. These and other alternative storage systems could be key to building a consistent supply of electricity for the grid and cutting the climate impacts of power generation around the world. In Eos’s batteries, the cathode is not made from the familiar mixture of lithium and other metals. Instead, the primary ingredient is zinc. Zinc-based batteries aren’t a new invention (researchers at Exxon patented zinc-bromine flow batteries in the 1970s), but Eos has developed and altered the technology over the last decade. Zinc-halide batteries have a few potential benefits over lithium-ion options, says Richey, vice president of research and development at Eos. “It’s a fundamentally different way to design a battery, really, from the ground up,” he says. 
Eos’s batteries use a water-based electrolyte (the liquid that moves charge around in a battery) instead of organic solvent, which makes them more stable and means they won’t catch fire, Richey says. The company’s batteries are also designed to have a longer lifetime than lithium-ion cells (about 20 years as opposed to 10 to 15) and don’t require as many safety measures, like active temperature control. There are some technical challenges that zinc-based and other alternative batteries will need to overcome to make it to the grid, says Rodby, technical principal at Volta Energy Technologies, a venture capital firm focused on energy storage technology. Zinc batteries have a relatively low efficiency, meaning more energy is lost during charging and discharging than in lithium-ion cells. Zinc-halide batteries can also fall victim to unwanted chemical reactions that may shorten the batteries’ lifetime if they’re not managed. Those technical challenges are largely addressable, Rodby says. The bigger challenge for Eos and other makers of alternative batteries will be manufacturing at large scales and cutting costs. “That’s what’s challenging here,” she says. “You have by definition a low-cost product and a low-cost market.” Batteries for grid storage need to get cheap quickly, and one of the major pathways is to make a lot of them. Eos currently operates a semi-automated factory in Pennsylvania with a maximum production of about 540 megawatt-hours annually (if those were lithium-ion batteries, it would be enough to power about 7,000 average US electric vehicles), though the facility doesn’t currently produce at its full capacity. The loan from the DOE is “big news,” says Eos CFO Kroeker. The company has been working on securing the funding for two years, and the loan will give the company “much-needed capital” to build its manufacturing capacity. Funding from the DOE will support up to four additional, fully automated lines in the existing factory. 
Altogether, the four lines could produce eight gigawatt-hours’ worth of batteries annually by 2026—enough to meet the daily needs of up to 130,000 homes. The DOE loan is a conditional commitment, and Eos will need to tick a few boxes to receive the funding. That includes reaching technical, commercial, and financial milestones, Kroeker says. Many alternative battery chemistries have struggled to transition from working samples in the lab and small manufacturing runs to large-scale commercial production. Not only that, but issues securing funding and problems lining up buyers have taken down in just the past decade. It can be difficult to bring alternatives to the market in energy storage, Kroeker says, though he sees this as the right time for new battery chemistries to make a dent. As renewables are rushing onto the grid, there’s a much higher need for large-scale energy storage than there was a decade ago. There’s also new support in place, like that make the business case for new batteries more favorable. “I think we’ve got a once-in-a-generation opportunity now to make a game-changing impact in our energy transition,” he says.
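The capacity figures quoted in this story can be sanity-checked with a few lines of arithmetic. The sketch below only divides the numbers given above (540 megawatt-hours across about 7,000 electric vehicles, and eight gigawatt-hours across up to 130,000 homes). The function and variable names are illustrative assumptions, and the per-vehicle and per-home values are simply what the quoted figures imply, not numbers reported by Eos or the DOE.

```python
# Unit conversions to kilowatt-hours.
MWH_TO_KWH = 1_000
GWH_TO_KWH = 1_000_000


def implied_kwh_per_unit(total_kwh: float, units: int) -> float:
    """Energy per unit (vehicle or home) implied by a total and a unit count."""
    return total_kwh / units


# Figures quoted in the story.
current_capacity_kwh = 540 * MWH_TO_KWH   # existing factory, per year
planned_capacity_kwh = 8 * GWH_TO_KWH     # four new lines, per year by 2026

kwh_per_ev = implied_kwh_per_unit(current_capacity_kwh, 7_000)
kwh_per_home = implied_kwh_per_unit(planned_capacity_kwh, 130_000)
scale_up = planned_capacity_kwh / current_capacity_kwh

print(f"Implied battery size per EV: {kwh_per_ev:.1f} kWh")
print(f"Implied energy per home: {kwh_per_home:.1f} kWh")
print(f"Planned output vs. current maximum: {scale_up:.1f}x")
```

On these figures, the planned lines would produce roughly 15 times the current factory's maximum annual output.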

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.
You need to talk to your kid about AI. Here are 6 things you should say.
In the past year, kids, teachers, and parents have had a crash course in artificial intelligence, thanks to the wildly popular AI chatbot ChatGPT. In a knee-jerk reaction, some schools banned the technology, only to cancel the ban months later. Now that many adults have caught up with what ChatGPT is, schools have started exploring ways to use AI systems to teach kids important lessons on critical thinking. At the start of the new school year, here are MIT Technology Review’s six essential tips for how to get started on giving your kid an AI education.
By Rhiannon Williams & Melissa Heikkilä
My colleague Will Douglas Heaven wrote about how AI can be used in schools for our recent Education issue.
Chinese AI chatbots want to be your emotional support
Last week, Baidu became the first Chinese company to roll out its large language model, called Ernie Bot, to the general public, following regulatory approval from the Chinese government.
Since then, four more Chinese companies have also made their LLM chatbot products broadly available, while more experienced players, like Alibaba and iFlytek, are still waiting for the clearance.
One thing that Zeyi Yang, our China reporter, noticed was how much more the Chinese AI bots are geared toward offering emotional support than their Western counterparts. Given that chatbots are a novelty right now, it raises questions about how the companies are hoping to keep users engaged once that initial excitement has worn off.
This story originally appeared in China Report, Zeyi’s weekly newsletter giving you the inside track on all things happening in tech in China. Sign up to receive it in your inbox every Tuesday.
The must-reads
I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.
1 China’s chips are far more advanced than we realized
Huawei’s latest phone has US officials wondering how effective their sanctions have really been.
+ It suggests China’s domestic chip tech is coming on in leaps and bounds.
+ Japan was once a chipmaking giant. What happened?
+ The US-China chip war is still escalating.
2 Meta’s AI teams are in turmoil
Internal groups are scrapping over the company’s computing resources.
+ Meta’s latest AI model is free for all.
3 Conspiracy theorists have rounded on digital cash
If authorities can’t counter those claims, digital currencies are dead in the water.
+ Is the digital dollar dead?
+ What’s next for China’s digital yuan?
4 Lawyers are the real winners of the crypto crash
Someone has to represent all those bankrupt companies.
+ Sam Bankman-Fried is adjusting to life behind bars.
5 Renting an EV is a minefield
Collecting a hire car that’s only half charged is far from ideal.
+ BYD, China’s biggest EV company, is eyeing an overseas expansion.
+ How new batteries could help your EV charge faster.
6 US immigration used fake social media profiles to spy on targets
Even though aliases are against many platforms’ terms of service.
7 The internet has normalized laughing at death
The creepy groups are a digital symbol of human cruelty.
8 New York is purging thousands of Airbnbs
A new law has made it essentially impossible for the company to operate in the city.
+ And hosts are far from happy about it.
9 Men are already rating AI-generated women’s hotness
In another bleak demonstration of how AI models can perpetuate harmful stereotypes.
+ Ads for AI sex workers are rife across social media.
10 Meet the young activists fighting for kids’ rights online
They’re demanding a say in the rules that affect their lives.
Quote of the day
“It wasn’t totally crazy. It was only moderately crazy.”
Ilya Sutskever, co-founder of OpenAI, reflecting on the company’s early desire to chase the theoretical goal of artificial general intelligence.
The big story
Marseille’s battle against the surveillance state
June 2022
Across the world, video cameras have become an accepted feature of urban life. Many cities in China now have dense networks of them, and London and New Delhi aren’t far behind. Now France is playing catch-up. Concerns have been raised throughout the country. But the surveillance rollout has met special resistance in Marseille, France’s second-biggest city. It’s unsurprising, perhaps, that activists are fighting back against the cameras, highlighting the surveillance system’s overreach and underperformance. But are they succeeding?
By Fleur Macdonald
We can still have nice things
A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line.)
+ How’d ya like dem ? Quite a lot, actually.
+ Why keeping isn’t as crazy as it sounds.
+ There’s no single explanation for why we get .
+ This couldn’t be cuter.
+ Here’s how to get your steak .

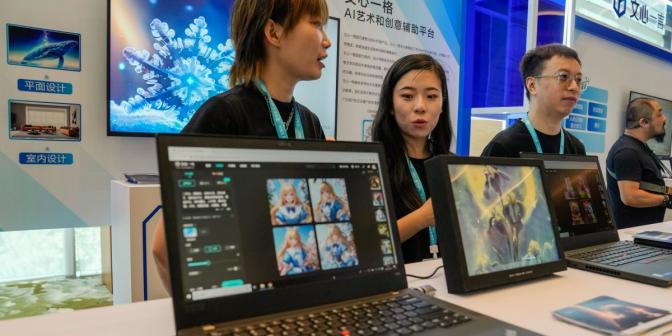

This story first appeared in China Report, MIT Technology Review’s newsletter about technology developments in China. Sign up to receive it in your inbox every Tuesday. Chinese ChatGPT-like bots are having a moment right now. As I reported last week, Baidu became the first Chinese tech company to roll out its large language model, called Ernie Bot, to the general public, following regulatory approval from the Chinese government. Previously, access required an application or was limited to corporate clients. I have to admit the Chinese public has reacted more passionately than I had expected. According to Baidu, the Ernie Bot mobile app reached 1 million users in the 19 hours following the announcement, and the model responded to more than 33.42 million user questions in 24 hours, averaging 23,000 questions per minute. Since then, four more Chinese companies (the facial-recognition giant SenseTime and three young startups, Zhipu AI, Baichuan AI, and MiniMax) have also made their LLM chatbot products broadly available. But some more experienced players, like Alibaba and iFlytek, are still waiting for the clearance. Like many others, I downloaded the Ernie Bot app last week to try it out. I was curious to find out how it’s different from its predecessors like ChatGPT. What I noticed first was that Ernie Bot does a lot more hand-holding. Unlike ChatGPT’s public app or website, which is essentially just a chat box, Baidu’s app has a lot more features that are designed to onboard and engage new users. Under Ernie Bot’s chat box, there’s an endless list of prompt suggestions, like “Come up with a name for a baby” and “Generating a work report.” There’s another tab called “Discovery” that displays over 190 pre-selected topics, including gamified challenges (“Convince the AI boss to raise my salary”) and customized chatting scenarios (“Compliment me”). 
It seems to me that a major challenge for Chinese AI companies is that now, with government approval to open up to the public, they actually need to earn users and keep them interested. To many people, chatbots are a novelty right now. But that novelty will eventually wear off, and the apps need to make sure people have other reasons to stay. One clever thing Baidu has done is to include a tab for user-generated content in the app. In the community forum, I can see the questions other users have asked the app, as well as the text and image responses they got. Some of them are on point and fun, while others are way off base, but I can see how this inspires users to try to input prompts themselves and work to improve the answers. Left: a successful generation from the prompt “Pikachu wearing sunglasses and smoking cigars.” Right: Ernie Bot failed to generate an image reflecting the literal or figurative meaning of 狗尾续貂, “To join a dog’s tail to a sable coat,” which is a Chinese idiom for a disappointing sequel to a fine work. Another feature that caught my attention was Ernie Bot’s efforts to introduce role-playing. One of the top categories on the “Discovery” page asks the chatbot to respond in the voice of pre-trained personas including Chinese historical figures like the ancient emperor Qin Shi Huang, living celebrities like Elon Musk, anime characters, and imaginary romantic partners. (I asked the Musk bot who it is; it answered: “I am Elon Musk, a passionate, focused, action-oriented, workaholic, dream-chaser, irritable, arrogant, harsh, stubborn, intelligent, emotionless, highly goal-oriented, highly stress-resistant, and quick-learner person.”) I have to say they do not seem to be very well trained; “Qin Shi Huang” and “Elon Musk” both broke character very quickly when I asked them to comment on serious matters like the state of AI development in China. They just gave me bland, Wikipedia-style answers. 
But the most popular persona—already used by over 140,000 people, according to the app—is called “the considerate elder sister.” When I asked “her” what her persona is like, she answered that she’s gentle, mature, and good at listening to others. When I then asked who trained her persona, she responded that she was trained by “a group of professional psychology experts and artificial-intelligence developers” and “based on analysis of a large amount of language and emotional data.” “I won’t answer a question in a robotic way like ordinary AIs, but I will give you more considerate support by genuinely caring about your life and emotional needs,” she also told me. I’ve noticed that Chinese AI companies have a particular fondness for emotional-support AI. Xiaoice, one of the first Chinese AI assistants, made its name by . And another startup, Timedomain, when it shut down its AI boyfriend voice service. Baidu seems to be setting up Ernie Bot for the same kind of use. I’ll be watching this slice of the chatbot space grow with equal parts intrigue and anxiety. To me, it’s one of the most interesting possibilities for AI chatbots. But this is more challenging than writing code or answering math problems; it’s an entirely different task to ask them to provide emotional support, act like humans, and stay in character all the time. And if the companies do pull it off, there will be more risks to consider: What happens when humans actually build deep emotional connections with the AI? Would you ever want emotional support from an AI chatbot? Tell me your thoughts at zeyi@technologyreview.com. Catch up with China 1. The mysterious advanced chip in Huawei’s newly released smartphone has sparked many questions and much speculation about China’s progress in chip-making technology. () 2. Meta took down the largest Chinese social media influence campaign to date, which included over 7,000 Facebook accounts that bashed the US and other adversaries of China. 
Like its predecessors, the campaign failed to attract attention. 3. Lawmakers across the US are concerned about the idea of China buying American farmland for espionage, but actual land purchase data from 2022 shows that very few deals were made by Chinese entities. 4. A Chinese government official was sentenced to life in prison on charges of corruption, including fabricating a Bitcoin mining company’s electricity consumption data. 5. Terry Gou, the billionaire founder of Foxconn, is running as an independent candidate in Taiwan’s 2024 presidential election. 6. The average Chinese citizen’s life span is now 2.2 years longer thanks to the efforts in the past decade to clean up air pollution. 7. Sinopec, the major Chinese oil company, predicts that gasoline demand in China will peak in 2023 because of the surging demand for electric vehicles. 8. Chinese sextortion scammers are flooding Twitter comment sections and making the site almost unusable for Chinese speakers. Lost in translation The favorite influencer of Chinese grandmas just got banned from social media. “Xiucai,” a 39-year-old man from Maozhou city, posted hundreds of videos on Douyin where he acts shy in China’s countryside, subtly flirts with the camera, and lip-synchs old songs. While the younger generations find these videos cringe-worthy, his look and style earned him a large following among middle-aged and senior women. He attracted over 12 million followers in just over two years, over 70% of whom were female and nearly half older than 50. In May, a 72-year-old fan took a 1,000-mile solo train ride to Xiucai’s hometown just so she could meet him in real life. But last week, his account was suddenly banned from Douyin, which said Xiucai had violated some platform rules. Local taxation authorities in Maozhou said he was reported for tax evasion, but the investigation hasn’t concluded yet. His disappearance made more young social media users aware of his cultish popularity. 
As those in China’s silver generation learn to use social media and even become addicted to it, they have also become a lucrative target for content creators. One more thing Forget about bubble tea. The trendiest drink in China this week is a latte mixed with baijiu, the potent Chinese liquor. The eccentric invention is a collaboration between Luckin Coffee, China’s largest cafe chain, and Kweichow Moutai, China’s most famous liquor brand. News of its release lit up Chinese social media because it sounds like an absolute abomination, but the very absurdity of the idea makes people want to know what it actually tastes like. Dear readers in China, if you’ve tried it, can you let me know what it was like? I need to know, for research reasons.

In the past year, kids, teachers, and parents have had a crash course in artificial intelligence, thanks to the wildly popular AI chatbot ChatGPT. In a knee-jerk reaction, some schools, such as the New York City public schools, banned the technology, only to cancel the ban months later. Now that many adults have caught up with the technology, schools have started exploring ways to use AI systems to teach kids important lessons on critical thinking. But it’s not just AI chatbots that kids are encountering in schools and in their daily lives. AI is increasingly everywhere: recommending shows to us on Netflix, helping Alexa answer our questions, powering your favorite interactive Snapchat filters and the way you unlock your smartphone. While some students will invariably be more interested in AI than others, understanding the fundamentals of how these systems work is becoming a basic form of literacy, something everyone who finishes high school should know, says Regina Barzilay, a professor at MIT and a faculty lead for AI at the MIT Jameel Clinic. The clinic recently ran a summer program for 51 high school students interested in the use of AI in health care. Kids should be encouraged to be curious about the systems that play an increasingly prevalent role in our lives, she says. “Moving forward, it could create humongous disparities if only people who go to university and study data science and computer science understand how it works,” she adds. At the start of the new school year, here are MIT Technology Review’s six essential tips for how to get started on giving your kid an AI education. 1. Don’t forget: AI is not your friend Chatbots are built to do exactly that: chat. The friendly, conversational tone ChatGPT adopts when answering questions can make it easy for pupils to forget that they’re interacting with an AI system, not a trusted confidante. This could make people more likely to believe what these chatbots say, instead of treating their suggestions with skepticism. 
While chatbots are very good at sounding like a sympathetic human, they’re merely mimicking human speech from data scraped off the internet, says Helen Crompton, a professor at Old Dominion University who specializes in digital innovation in education. “We need to remind children , because it’s all going into a large database,” she says. Once your data is in the database, it becomes . It could be used to make technology companies more money without your consent, or it could even be extracted by hackers. 2. AI models are not replacements for search engines Large language models are only as good as the data they’ve been trained on. That means that while chatbots are adept at confidently answering questions with text that may seem plausible, not all the information they offer up will be . AI language models are also known to present falsehoods as facts. And depending on where that data was collected, they can perpetuate . Students should treat chatbots’ answers as they should any kind of information they encounter on the internet: critically. “These tools are not representative of everybody—what they tell us is based on what they’ve been trained on. Not everybody is on the internet, so they won’t be reflected,” says Victor Lee, an associate professor at Stanford Graduate School of Education who has created for high school curriculums. “Students should pause and reflect before we click, share, or repost and be more critical of what we’re seeing and believing, because a lot of it could be fake.”While it may be tempting to rely on chatbots to answer queries, they’re not a replacement for Google or other search engines, says David Smith, a professor of bioscience education at Sheffield Hallam University in the UK, who’s been preparing to help his students navigate the uses of AI in their own learning. Students shouldn’t accept everything large language models say as an undisputed fact, he says, adding: “Whatever answer it gives you, you’re going to have to check it.” 3. 
Teachers might accuse you of using an AI when you haven’t One of the biggest challenges for teachers now that generative AI has reached the masses is working out when students have used AI to write their assignments. While plenty of companies have launched products that promise to has been written by a human or a machine, the problem is that , and it’s . There have been of cases where teachers assume an essay has been generated by AI when it actually hasn’t. Familiarizing yourself with your child’s school’s AI policies or AI disclosure processes (if any) and reminding your child of the importance of abiding by them is an important step, says Lee. If your child has been wrongly accused of using AI in an assignment, remember to stay calm, says Crompton. Don’t be afraid to challenge the decision and ask how it was made, and feel free to point to the record ChatGPT keeps of an individual user’s conversations if you need to prove your child didn’t lift material directly, she adds. 4. Recommender systems are designed to get you hooked and might show you bad stuff It’s important to understand and explain to kids how recommendation algorithms work, says Teemu Roos, a computer science professor at the University of Helsinki, who is developing a curriculum on AI for Finnish schools. Tech companies make money when people watch ads on their platforms. That’s why they have developed powerful AI algorithms that recommend content, such as videos on YouTube or TikTok, so that people will get hooked and to stay on the platform for as long as possible. The algorithms track and closely measure what kinds of videos people watch, and then recommend similar videos. The more cat videos you watch, for example, the more likely the algorithm is to think you will want to see more cat videos. These services have a tendency to guide users to harmful content like misinformation, Roos adds. 
This is because people tend to linger on content that is weird or shocking, such as misinformation about health, or extreme political ideologies. It’s very easy to get sent down a rabbit hole or stuck in a loop, so it’s a good idea not to believe everything you see online. You should double-check information from other reliable sources too. 5. Remember to use AI safely and responsibly Generative AI isn’t just limited to text: there are plenty of free apps and web programs that can impose someone’s face onto someone else’s body within seconds. While today’s students are likely to have been warned about the dangers of sharing intimate images online, they should be equally wary of uploading friends’ faces into —particularly because this could have legal repercussions. For example, courts have found teens guilty of spreading child pornography for sending explicit material about other teens or even . “We have conversations with kids about responsible online behavior, both for their own safety and also to not harass, or doxx, or catfish anyone else, but we should also remind them of their own responsibilities,” says Lee. “Just as nasty rumors spread, you can imagine what happens when someone starts to circulate a fake image.” It also helps to provide children and teenagers with specific examples of the privacy or legal risks of using the internet rather than trying to talk to them about sweeping rules or guidelines, Lee points out. For instance, talking them through how AI face-editing apps could retain the pictures they upload, or pointing them to news stories about platforms being hacked, can make a bigger impression than general warnings to “be careful about your privacy,” he says. 6. Don’t miss out on what AI’s actually good at It’s not all doom and gloom, though. While many early discussions around AI in the classroom revolved around its potential as a , when it’s used intelligently, it can be an enormously helpful tool. 
Students who find themselves struggling to understand a tricky topic could ask ChatGPT to break it down for them step by step, or to rephrase it as a rap, or to take on the persona of an expert biology teacher to allow them to test their own knowledge. It’s also exceptionally good at quickly drawing up detailed tables to compare the relative pros and cons of certain colleges, for example, which would otherwise take hours to research and compile. Asking a chatbot for glossaries of difficult words, or to practice history questions ahead of a quiz, or to help a student evaluate answers after writing them, are other beneficial uses, points out Crompton. “So long as you remember the bias, the tendency toward , and the importance of digital literacy—if a student is using it in the right way, that’s great,” she says. “We’re just all figuring it out as we go.”
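The feedback loop described in tip 4 (track what people watch, then recommend more of the same) can be illustrated with a toy sketch. This is a deliberately simplified assumption, not how YouTube, TikTok, or any real platform works: the catalog, the video titles, and the `recommend` function are all hypothetical, and the only signal used is how often each category appears in the watch history.

```python
from collections import Counter


def recommend(watch_history, catalog, k=2):
    """Suggest up to k unwatched videos from the most-watched categories.

    watch_history: list of video titles the user has already watched.
    catalog: dict mapping every video title to its category.
    """
    # Count how many watched videos fall into each category.
    category_counts = Counter(catalog[title] for title in watch_history)
    watched = set(watch_history)
    unwatched = [title for title in catalog if title not in watched]
    # Rank by category popularity in the history (highest first);
    # ties are broken alphabetically by title.
    ranked = sorted(unwatched, key=lambda title: (-category_counts[catalog[title]], title))
    return ranked[:k]


# Hypothetical catalog and viewing history.
catalog = {
    "cat plays piano": "cats",
    "cat vs cucumber": "cats",
    "kitten compilation": "cats",
    "sleepy cat": "cats",
    "pasta recipe": "cooking",
    "bread basics": "cooking",
    "city bike tour": "travel",
}
history = ["cat plays piano", "pasta recipe", "cat vs cucumber"]

suggestions = recommend(history, catalog)
print(suggestions)
```

Because two of the three watched videos are about cats, both suggestions are cat videos. Watching those would tilt the counts further toward cats, which is the loop Roos warns about.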

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.
Coming soon: MIT Technology Review’s 15 Climate Tech Companies to Watch
For decades, MIT Technology Review has published annual lists highlighting the advances redefining what technology can do and the brightest minds pushing their fields forward. This year, we’re launching a new list, recognizing companies making progress on one of society’s most pressing challenges: climate change. MIT Technology Review’s 15 Climate Tech Companies to Watch will highlight the startups and established businesses that our editors think could have the greatest potential to address the threats of global warming. And attendees of our upcoming ClimateTech conference will be the first to find out.
By James Temple
ClimateTech is taking place at the MIT Media Lab on MIT’s campus in Cambridge, Massachusetts, on October 4-5. You can register for the event, either in person or online.
We know remarkably little about how AI language models work
AI language models are not humans, and yet we evaluate them as if they were, using tests like the bar exam or the United States Medical Licensing Examination. The models tend to do really well in these exams, probably because examples of such exams are abundant in the models’ training data. Now, a growing number of experts have called for these tests to be ditched, saying they boost AI hype and fuel the illusion that such AI models are more capable than they actually are. These discussions (raised last week) highlight just how little we know about how AI language models work and why they generate the things they do, and why our tendency to anthropomorphize can be problematic.
Melissa’s story first appeared in The Algorithm, her weekly newsletter giving you the inside track on all things AI. Sign up to receive it in your inbox every Monday.
The must-reads
I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.
1 Elon Musk is suing the Anti-Defamation League
He claims the organization is trying to kill X, blaming it for a 60% drop in advertising revenue.
+ The ADL has tracked a rise in hate speech on X since Musk took over.
2 China is creating a state-backed chip fund
It’s part of the country’s plan to sidestep increasingly harsh sanctions from the US.
+ The outlook for China’s economy isn’t too rosy right now.
+ The US-China chip war is still escalating.
3 India’s lunar mission is officially complete
Its rover and lander have shut down, for now.
+ The rover even managed a small hop before entering sleep mode.
+ What’s next for the moon.
4 ‘Miracle cancer cures’ don’t come cheap
The high costs of personalized medicine mean many of the most vulnerable patients are priced out of life-saving treatment.
+ Two sick children and a $1.5 million bill: One family’s race for a gene therapy cure.
5 Investors are losing faith in Sequoia
The venture capital firm’s major shakeup has raised a lot of questions about its future.
6 Record numbers of Pakistan’s tech workers are leaving the country
Talented engineers are seeking new opportunities, away from home.
7 Video games are becoming gentler
A new wave of gamers want to be soothed, not overstimulated.
8 Spotify’s podcasting empire is crumbling
The majority of its shows aren’t profitable, and competition is fierce.
+ Bad news for white noise podcasts: ad payouts are being stopped.
9 Who is tradwife content for, really?
The young influencers espousing traditional family values are unlikely to do so forever.
10 AI wants to help us talk to the animals
Wildlife is under threat. Trying to communicate with other species could help us protect them.
Quote of the day
“It’s the end of the month versus the end of the world.”
Nicolas Miailhe, co-founder of think tank the Future Society, pointing out the extreme disparity between camps of AI experts who can’t agree over how big a threat AI poses to humanity.
The big story
The quest to learn if our brain’s mutations affect mental health
August 2021
Scientists have struggled in their search for specific genes behind most brain disorders, including autism and Alzheimer’s disease. Unlike problems with some other parts of our body, the vast majority of brain disorder presentations are not linked to an identifiable gene.
But a University of California, San Diego study published in 2001 suggested a different path. What if it wasn’t a single faulty gene, or even a series of genes, that always caused cognitive issues? What if it could be the genetic differences between cells?
The explanation had seemed far-fetched, but more researchers have begun to take it seriously. Scientists already knew that the 85 billion to 100 billion neurons in your brain work to some extent in concert. What they want to know is whether there is a risk when some of those cells might be singing a different genetic tune.
By Roxanne Khamsi
We can still have nice things
A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line.)
+ Has it really been 15 years since started doing cartwheels mid-performance on the Today show?
+ I’m blessing your Tuesday with these adorable (meerkittens?)
+ Japan’s are weird, wonderful, and everything in between.
+ Instagram’s hottest faces wouldn’t be seen dead without a .
+ A ? Perfection.

AI language models are not humans, and yet we evaluate them as if they were, using tests like the bar exam or the United States Medical Licensing Examination. The models tend to do really well in these exams, probably because examples of such exams are abundant in the models’ training data. Yet, as my colleague Will Douglas Heaven writes in , “some people are dazzled by what they see as glimmers of human-like intelligence; others aren’t convinced one bit.” A growing number of experts have called for these tests to be ditched, saying they boost AI hype and create “the illusion that [AI language models] have greater capabilities than what truly exists.” . What stood out to me in Will’s story is that we know remarkably little about how AI language models work and why they generate the things they do. With these tests, we’re trying to measure and glorify their “intelligence” based on their outputs, without fully understanding how they function under the hood. Other highlights: Our tendency to anthropomorphize makes this messy: “People have been giving human intelligence tests—IQ tests and so on—to machines since the very beginning of AI,” says Melanie Mitchell, an artificial-intelligence researcher at the Santa Fe Institute in New Mexico. “The issue throughout has been what it means when you test a machine like this. It doesn’t mean the same thing that it means for a human.” Kids vs GPT-3: Researchers at the University of California, Los Angeles gave GPT-3 a story about a magical genie transferring jewels between two bottles and then asked it how to transfer gumballs from one bowl to another, using objects such as a posterboard and a cardboard tube. The idea is that the story hints at ways to solve the problem. GPT-3 proposed elaborate but mechanically nonsensical solutions. “This is the sort of thing that children can easily solve,” says Taylor Webb, one of the researchers. 
AI language models are not humans: “With large language models producing text that seems so human-like, it is tempting to assume that human psychology tests will be useful for evaluating them. But that’s not true: human psychology tests rely on many assumptions that may not hold for large language models,” says Laura Weidinger, a senior research scientist at Google DeepMind. Lessons from the animal kingdom: Lucy Cheke, a psychologist at the University of Cambridge, UK, suggests AI researchers could adapt techniques used to study animals, which have been developed to avoid jumping to conclusions based on human bias. Nobody knows how language models work: “I think that the fundamental problem is that we keep focusing on test results rather than how you pass the tests,” says Tomer Ullman, a cognitive scientist at Harvard University. . Deeper Learning Google DeepMind has launched a watermarking tool for AI-generated images Google DeepMind has launched a new watermarking tool that labels whether images have been generated with AI. The tool, called SynthID, will initially be available only to users of Google’s AI image generator Imagen. Users will be able to generate images and then choose whether to add a watermark or not. The hope is that it could help people tell when AI-generated content is being passed off as real, or protect copyright. Baby steps: Google DeepMind is now the first Big Tech company to publicly launch such a tool, following a voluntary pledge with the White House to develop responsible AI. Watermarking—a technique where you hide a signal in a piece of text or an image to identify it as AI-generated—has become one of the most popular ideas proposed to curb such harms. It’s a good start, but watermarks alone won’t create more trust online. 
Bits and Bytes

Chinese ChatGPT alternatives just got approved for the general public
Baidu, one of China’s leading artificial-intelligence companies, has announced it will open up access to its ChatGPT-like large language model, Ernie Bot, to the general public. Our reporter Zeyi Yang looks at what this means for Chinese internet users.

Brain implants helped create a digital avatar of a stroke survivor’s face
Incredible news. Two papers in Nature show major advancements in the effort to translate brain activity into speech. Researchers managed to help women who had lost their ability to speak communicate again with the help of a brain implant, AI algorithms and digital avatars.

Inside the AI porn marketplace where everything and everyone is for sale
This was an excellent investigation looking at how the generative AI boom has created a seedy marketplace for deepfake porn. Completely predictable and frustrating how little we have done to prevent real-life harms like nonconsensual deepfake pornography.

An army of overseas workers in “digital sweatshops” powers the AI boom
Millions of people in the Philippines work as data annotators for data company Scale AI. But as this investigation into the questionable labor conditions shows, many workers are earning below the minimum wage and have had payments delayed, reduced or canceled.

The tropical island with the hot domain name
Lol. The AI boom has meant Anguilla has hit the jackpot with its .ai domain name. The country is expected to make millions this year from companies wanting the buzzy domain name.

P.S. We’re hiring! MIT Technology Review is looking for an ambitious AI reporter to join our team with an emphasis on the intersection of hardware and AI. This position is based in Cambridge, Massachusetts. Sounds like you, or someone you know?

For decades, MIT Technology Review has published annual lists highlighting the advances redefining and the pushing their fields forward. This year, we’re launching a new list, recognizing companies making progress on one of society’s most pressing challenges: climate change. MIT Technology Review’s 15 Climate Tech Companies to Watch will highlight startups and established businesses that our editors think could have the greatest potential to substantially reduce greenhouse-gas emissions or otherwise address the threats of global warming. Attendees of the upcoming will be the first to learn the names of the selected companies, and founders or executives from several will appear on stage at the event. The conference will be held at the MIT Media Lab on MIT’s campus in Cambridge, Massachusetts, on October 4-5. . MIT Technology Review’s climate team consulted dozens of industry experts, academic sources, and investors to come up with a long list of nominees, representing a broad array of climate technologies. From there, the editors worked to narrow down the list to 15 companies whose technical advances and track records in implementing solutions give them a real shot at reducing emissions or easing the harms climate change could cause. We do not profess to be soothsayers. Businesses fail for all sorts of reasons, and some of these may. But all of them are pursuing paths worth exploring as the world races to develop cleaner, better ways of generating energy, producing food, and moving things and people around the globe. We’re confident we’ve picked a list of companies that could really help to combat the rising dangers before us. We’re excited to share the winners on October 4. And we hope you can to be among the first to hear our selections and share your feedback.

This is today’s edition of , our weekday newsletter that provides a daily dose of what’s going on in the world of technology. How one elite university is approaching ChatGPT this school year For many people, the start of September marks the real beginning of the year. Back-to-school season always feels like a reset moment. However, the big topic this time around seems to be the same thing that defined the end of last year: ChatGPT and other large language models.Last winter and spring brought so many headlines about AI in the classroom, with some panicked schools going as far as to ban ChatGPT altogether. Now, with the summer months having offered a bit of time for reflection, some schools seem to be reconsidering their approach. Tate Ryan-Mosley, our senior tech policy reporter, spoke to the associate provost at Yale University to find out why the prestigious school never considered banning ChatGPT—and instead wants to work with it. . Tate’s story is from The Technocrat, her weekly newsletter covering tech policy and power. to receive it in your inbox every Friday. If you’re interested in reading more about AI’s effect on education, why not check out: + ChatGPT is going to change education, not destroy it. The narrative around cheating students doesn’t tell the whole story. Meet the teachers who think generative AI could actually make learning better. . + Read why a high school senior believes that for the better. + How AI is helping historians better understand our past. The historians of tomorrow are using computer science to analyze how people lived centuries ago. . The must-reads I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology. 1 The seemingly unstoppable rise of China’s EV makersThe country’s internet giants are becoming eclipsed by its ambitious car companies. ( $)+ Working out how to recycle those sizable batteries is still a struggle. 
( $)+ The US secretary of commerce’s trip to China is seriously high stakes. ( $)+ China’s car companies are turning into tech companies. () 2 US intelligence is developing surveillance-equipped clothingSmart textiles, including underwear, could capture vast swathes of data for officials. ()+ Home Office officials in the UK lobbied in favor of facial recognition. () 3 India’s intense tech training schools are breeding toxic cultures But the scandal-stricken schools are still seen as the best path to a high-flying career. ( $) 4 Organizations are struggling to fight an influx of cyber crimeThere just aren’t enough skilled cyber security workers to defend against hackers. ( $) 5 Kiwi Farms just won’t dieDespite transgender activists’ efforts to keep its hateful campaigns offline. ( $) 6 The tricky ethics of CRISPRJust because we can edit genes, doesn’t mean we should. ( $)+ The creator of the CRISPR babies was released from a Chinese prison last year. () 7 This startup is training ultra-Orthodox Jews for hi-tech careersHaredi men are learning how to use computers and programming languages for the first time. () 8 Silicon Valley’s latest obsession? TestosteroneFounders are fixated on the hormone’s global decline—and worrying about their own levels. ( $) 9 We’re bidding a fond farewell to Netflix’s DVDs While demand for the physical discs has dwindled, die-hard devotees are devastated. ( $) 10 Concerts are different nowYou can thank TikTok for all those outlandish outfits. ()+ A Montana official is hell-bent on banning TikTok. ( $)+ TikTok’s hyper-realistic beauty filters are here to stay. () Quote of the day “I think they’re a generation ahead of us.” —Renault CEO Luca de Meo reflects on China’s electric vehicle makers’ stranglehold on the industry, reports. The big story What to expect when you’re expecting an extra X or Y chromosome August 2022 Sex chromosome variations, in which people have a surplus or missing X or Y, occur in as many as one in 400 births. 
Yet the majority of people affected don’t even know they have them, because these conditions can fly under the radar. As more expectant parents opt for noninvasive prenatal testing in hopes of ruling out serious conditions, many of them are surprised to discover instead that their fetus has a far less severe—but far less well-known—condition. And because so many sex chromosome variations have historically gone undiagnosed, many ob-gyns are not familiar with these conditions, leaving families to navigate the unexpected news on their own. . —Bonnie Rochman We can still have nice things A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line or .) + Some of the creative ideas registered by inventors in the UK last year are truly off the wall—a path that , anyone?+ Congratulations to Tami Manis, who has the honor of owning the for a woman.+ Grilling is a science as well as an art.+ This steel drum cover of 50 Cent’s is everything I hoped for.+ Take a sneaky peek at this year’s mesmerizing shortlist.

This is today’s edition of , our weekday newsletter that provides a daily dose of what’s going on in the world of technology. A biotech company says it put dopamine-making cells into people’s brains The news: In an important test for stem-cell medicine, biotech company BlueRock Therapeutics says implants of lab-made neurons introduced into the brains of 12 people with Parkinson’s disease appear to be safe and may have reduced symptoms for some of them. How it works: The new cells produce the neurotransmitter dopamine, a shortage of which is what produces the devastating symptoms of Parkinson’s, including problems moving. The replacement neurons were manufactured using powerful stem cells originally sourced from a human embryo created using an in vitro fertilization procedure. Why it matters: The small-scale trial is one of the largest and most costly tests yet of embryonic-stem-cell technology, the controversial and much-hyped approach of using stem cells taken from IVF embryos to produce replacement tissue and body parts. . —Antonio Regalado Here’s why I am coining the term “embryo tech” Antonio, our senior biomedicine editor, has been following experiments using embryonic stem cells for quite some time. He has coined the term “embryo tech” for the powerful technology researchers can extract by studying them, which includes new ways of reproducing through IVF—and could even hold clues to real rejuvenation science. To read more about embryo tech’s exciting potential, check out the latest edition of , our weekly biotech newsletter. to receive it in your inbox every Thursday. The must-reads I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology. 1 The US government has earmarked $12 billion to speed up the transition to EVsIt’ll incentivise existing automakers to refurbish their factories into EV production lines. ()+ Driving an EV is a real learning curve. 
+ Why getting more EVs on the road is all about charging.

2 We still don’t know how effective geoengineering the climate could be
And scientists are divided over whether it’s wasteful at best, dangerous at worst.
+ A startup released particles into the atmosphere, in an effort to tweak the climate.

3 Covid is on the rise again
The number of cases is creeping up around the world—but try not to panic.
+ Covid hasn’t entirely gone away—here’s where we stand.

4 Apple is dropping its iCloud photo-scanning tool
The controversial mechanism would create new opportunities for data thieves, the company has concluded.

5 India is launching a probe to study the sun
Buoyed by the success of its recent lunar landing, the spacecraft is set to take off on Saturday.
+ The lunar Chandrayaan-3 probe is capturing impressive new pictures.
+ Scientists have solved a light-dimming space mystery.

6 Generative AI is unlikely to interfere in major elections
While it has disruptive potential, panicking about it is unwarranted.
+ Americans are worrying that AI could make their lives worse, however.
+ Six ways that AI could change politics.

7 We’ve never seen a year for hurricanes quite like this
The combination of an El Niño year and extreme heat creates the perfect storm.
+ Here’s what we know about hurricanes and climate change.

8 An AI-powered drone beat champion human pilots
It’s the first time an AI system has outperformed human pilots in a physical sport.
+ New York police will use drones to surveil Labor Day parties.

9 LinkedIn’s users are opening up
As other social media platforms falter, they’ve started oversharing on the professional network.

10 Brazil’s delivery workers are fighting back against rude customers
By threatening to eat their food if they don’t comply.
() Quote of the day “It could be a cliff we end up falling off, or a mountain we climb to discover a beautiful view.” —George Bamford, founder of luxury watch customizing company Bamford Watch Department, describes how he’s been dabbling with AI to visualize new timepieces to . The big story El Paso was “drought-proof.” Climate change is pushing its limits. December 2021 El Paso has long been a model for water conservation. It’s done all the right things—it’s launched programs to persuade residents to use less water and deployed technological systems, including desalination and wastewater recycling, to add to its water resources. A former president of the water utility once famously declared El Paso “drought-proof.” Now, though, even El Paso’s careful plans are being challenged by intense droughts. As the pressure ratchets up, El Paso, and places like it, force us to ask just how far adaptation can go. . —Casey Crownhart We can still have nice things A place for comfort, fun and distraction in these weird times. (Got any ideas? Drop me a line or .) + Wait a minute, that’s !+ A YouTube channel delving into is what the world needs right now.+ It’s time to plan an .+ David Tennant doing a dramatic reading of is a thing of beauty.+ How to make your own at home—it’s super quick.

This article first appeared in The Checkup, MIT Technology Review’s weekly biotech newsletter. To receive it in your inbox every Thursday, and read articles like this first, . This week, I published a story about the results of a study on Parkinson’s disease in which a biotech company transplanted dopamine-making neurons into people’s brains. (You can read the full story .) The reason I am following this experiment, and others like it, is that they are long-awaited tests of transplant tissue made from embryonic stem cells. Those are the sometimes controversial cells first plucked from human embryos left over from in vitro fertilization procedures 25 years ago. Their medical promise is they can turn into any other kind of cell. In some ways, stem cells are a huge disappointment. Despite their potential, scientists still haven’t crafted any approved medical treatment from them after all this time. The Parkinson’s study, run by the biotech company BlueRock, a division of Bayer, just passed phase 1, the earliest stage of safety testing. The researchers still don’t know whether the transplant works. I’m not sure how much money has been plowed into embryonic stem cells so far, but it’s definitely in the billions. And in many cases, the original proof of principle that cell transplants might work is actually decades old—like experiments from the 1990s showing that pancreas cells from cadavers, if transplanted, could treat diabetes. Cells derived from human cadavers, and sometimes from abortion tissue, make for an uneven product that’s hard to obtain. Today’s stem-cell companies aim instead to manufacture cells to precise specifications, increasing the chance they’ll succeed as real products. That actually isn’t so easy—and it’s a big part of the reason for the delay. “I can tell you why there’s nothing: it’s a manufacturing issue,” says Mark Kotter. 
He’s the founder of a startup company, Bit Bio, that is among those developing new ways to make stem cells do researchers’ bidding. While there aren’t any treatments built from embryonic stem cells yet, when I look around biology labs, these cells are everywhere. This summer, when I visited the busy cell culture room at the Whitehead Institute, on MIT’s campus, a postdoc named Julia Juong pulled out a plate of them and let me see their silvery outlines through a microscope. Juong, a promising young scientist, is also working on new ways to control embryonic stem cells. Incredibly, the cells I was looking at were descendants of the earliest supplies, dating back to 1998. One curious property of embryonic stem cells is that they are immortal; they keep dividing forever. “These are the originals,” Juong said. That reproducibility is part of why stem cells are technology, not just a science project. And what a cool technology it is. The internet has all the world’s information. A one-cell embryo has the information to make the whole human body. It’s what I have started to think of as “embryo tech.” I don’t mean what we do to embryos (like gene testing or even gene editing) but, instead, the powerful technology researchers can extract by studying them. Embryo tech includes stem cells and new ways of reproducing through IVF. It could even hold clues to real rejuvenation science. For instance, one lab in San Diego is using stem cells to grow brain organoids, a bundle of fetal-stage brain cells living in a petri dish. Scientists there plan to attach the organoid to a robot and learn to guide it through a maze. It sounds wild, but some researchers imagine that cell phones of the future could have biological components, even bits of brain, in them. Another recent example of embryo tech is in longevity science. Researchers now know how to turn any cell into a stem cell, by exposing it to what are called transcription factors. 
It means they don’t need embryos (with their ethical drawbacks) as the starting point. One hot idea in biotech is to give people controlled doses of these factors in order to actually rejuvenate body parts. Until recently, scientific dogma said human lives could only run in one direction: forward. But now the idea is to turn back the clock—by pushing your cells just a little way back in the direction of the embryo you once were. One company working on the idea is Turn Bio, which thinks it can inject the factors into people’s skin to get rid of wrinkles. Another company, , has raised $3 billion to pursue the deep scientific questions around this phenomenon. Finally, another cool discovery is that given the right cues, stem cells will try to self-organize into shapes that look like embryos. These entities, called synthetic embryos, or embryo models, are going to be useful in research, including studies aimed at developing new contraceptives. They are also a dazzling demonstration that any cell, even a bit of skin, may have the intrinsic capacity to create an entirely new person. All these, to my mind, are examples of embryo tech. But by its nature, this type of technology can shock our sensibilities. It’s the old story: reproduction is something secret, even divine. And toying with the spark of life in the lab—well, that’s playing at Frankenstein, isn’t it? When reporting about the Parkinson’s treatment, I learned that Bayer is still anxious about embryo tech. Those at the company have been tripping over themselves to avoid saying “embryo” at all. That’s because Germany has a very strict law that forbids destruction of embryos for research within its borders. So what will embryo tech lead to next? I’m going to be tracking the progress of human embryonic stem cells, and I am working on a few big stories from the frontiers that I hope will shock, awe, and inspire. So stay tuned to MIT Technology Review. 
Read more from MIT Technology Review’s archive Earlier this month, we published . While there are no treatments yet, the number of experiments on patients is growing. That has some researchers predicting that the technology could deliver soon. It’s about time! And check out the of our magazine, where we our on the topic, from way back in 1998. Stem cells come from embryos, but surprisingly, the reverse also seems to be the case: given a few nudges, these potent cells will spontaneously form structures that look, and act, a lot like real embryos. I first reported on the appearance of “” in 2017 and the topic has only heated up since, as we recounted this June in about the wild race to improve the technology. Stem cells aren’t the only approach to regrowing organs. In fact, some of our body parts have the ability to regenerate on their own. Jessica Hamzelou reported on a biotech company that’s trying to make inside people’s lymph nodes. From around the web The overdose reversal drug Narcan is going over-the-counter. A two-pack of the nasal spray will cost $49.99 and should be at US pharmacies next week. The move comes as overdoses from the opioid fentanyl spiral out of control. () If you’re having surgery, you’ll probably be looking for the best surgeon you can get. That might be a woman, according to a study finding that patients of female surgeons are a lot less likely to die in the months following an operation than those operated on by men. The reasons for the effect are unknown. () New weight-loss drugs don’t just cause people to shed pounds. One of them, Wegovy, could also protect against heart failure. . I stopped worrying about covid-19 after my second vaccine shot and never looked back. But a new variant has some people asking, “How bad could BA.2.86 get?” ()

In an important test for stem-cell medicine, a biotech company says implants of lab-made neurons introduced into the brains of 12 people with Parkinson’s disease appear to be safe and may have reduced symptoms for some of them. The added cells should produce the neurotransmitter dopamine, a shortage of which is what produces the devastating symptoms of Parkinson’s, including problems moving. “The goal is that they form synapses and talk to other cells as if they were from the same person,” says Claire Henchcliffe, a neurologist at the University of California, Irvine, who is one of the leaders of the study. “What’s so interesting is that you can deliver these cells and they can start talking to the host.” The study is one of the largest and most costly tests yet of embryonic-stem-cell technology, the controversial and much-hyped approach of using stem cells taken from IVF embryos to produce replacement tissue and body parts. The small-scale trial, whose main aim was to demonstrate the safety of the approach, was sponsored by BlueRock Therapeutics, a subsidiary of the drug giant Bayer. The replacement neurons were manufactured using powerful stem cells originally sourced from a human embryo created using an in vitro fertilization procedure. According to data presented by Henchcliffe and others on August 28 at the International Congress for Parkinson’s Disease and Movement Disorder in Copenhagen, there are also hints that the added cells had survived and were reducing patients’ symptoms a year after the treatment. These clues that the transplants helped came from brain scans that showed an increase in dopamine cells in the patients’ brains as well as a decrease in “off time,” or the number of hours per day the volunteers felt they were incapacitated by their symptoms. However, outside experts expressed caution in interpreting the findings, saying they seemed to show inconsistent effects—some of which might be due to the placebo effect, not the treatment.
“It is encouraging that the trial has not led to any safety concerns and that there may be some benefits,” says Roger Barker, who studies Parkinson’s disease at the University of Cambridge. But Barker called the evidence the transplanted cells had survived “a bit disappointing.” Because researchers can’t see the cells directly once they are in a person’s head, they instead track their presence by giving people a radioactive precursor to dopamine and then watching its uptake in their brains in a PET scanner. To Barker, these results were not so strong and he says it’s “still a bit too early to know” whether the transplanted cells took hold and repaired the patients’ brains. Legal questions Embryonic stem cells were first isolated in 1998 at the University of Wisconsin from embryos made in fertility clinics. They are useful to scientists because they can be grown in the lab and, in theory, be coaxed to form any of the 200 or so cell types in the human body, prompting attempts to restore vision, cure diabetes, and reverse spinal cord injury. However, there is still no medical treatment based on embryonic stem cells, despite billions of dollars’ worth of research by governments and companies over two and a half decades. BlueRock’s study remains one of the key attempts to change that. And stem cells continue to raise delicate issues in Germany, where Bayer is headquartered. Under Germany’s Embryo Protection Act, one of the most restrictive such laws in the world, it’s still a crime, punishable with a prison sentence, to derive embryonic cells from an embryo. What is legal, in certain circumstances, is to use existing cell supplies from abroad, so long as they were created before 2007. Seth Ettenberg, the president and CEO of BlueRock, says the company is manufacturing neurons in the US and that to do so it employs embryonic stem cells from the original supplies in Wisconsin, which remain widely used. 
“All the operations of BlueRock respect the high ethical and legal standards of the German Embryo Protection Act, given that BlueRock is not conducting any activities with human embryos,” Nuria Aiguabella Font, a Bayer spokesperson, said in an email. Long history The idea of replacing dopamine-making cells to treat Parkinson’s dates to the 1980s, when doctors tried it with fetal neurons collected after abortions. Those studies proved equivocal. While some patients may have benefited, the experiments generated alarming headlines after others developed side effects, like uncontrolled writhing and jerking. Using brain cells from fetuses wasn’t just ethically dubious to some. Researchers also became convinced such tissue was so variable and hard to obtain that it couldn’t become a standardized treatment. “There is a history of attempts to transplant cells or tissue fragments into brains,” says Henchcliffe. “None ever came to fruition, and I think in the past there was a lack of understanding of the mechanism of action, and a lack of sufficient cells of controlled quality.” Yet there was evidence transplanted cells could live. Post-mortem examinations of some patients who’d been treated with fetal cells showed that the transplants were still present many years later. “There are a whole bunch of people involved in those fetal-cell transplants. They always wanted to find out—if you did it right, would it work?” says Jeanne Loring, a cofounder of Aspen Neuroscience, a stem-cell company planning to launch its own tests for Parkinson’s disease. The discovery of embryonic stem cells is what made a more controlled test a possibility. These cells can be multiplied and turned into dopamine-making cells by the billions. The initial work to manufacture such dopamine cells, as well as tests on animals, was performed by Lorenz Studer at Memorial Sloan Kettering Cancer Center.
In 2016 he became a scientific founder of BlueRock, which was initially formed as a joint venture between Bayer and the investment company Versant Ventures. “It’s one of the first times in the field when we have had such a well-understood and uniform product to work with,” says Henchcliffe, who was involved in the early efforts. In 2019, Bayer bought out Versant’s stake in a deal valuing the stem-cell company at around $1 billion. Movement disorder In Parkinson’s disease, the cells that make dopamine die off, leading to shortages of the brain chemical. That can cause tremors, rigid limbs, and a general decrease in movement called bradykinesia. The disease is typically slow-moving, and a drug called levodopa can control the symptoms for years. A type of brain implant called a deep brain stimulator can also reduce symptoms. The disease is progressive, however, and eventually, levodopa can’t control the symptoms as well.

This year, the actor Michael J. Fox confided to CNN that he retired from acting for good after he couldn’t remember his lines anymore, although that was 30 years after his diagnosis. “I’m not gonna lie. It’s getting harder,” Fox told the network. “Every day it’s tougher.” The promise of a cell therapy is that doctors wouldn’t just patch over symptoms but could actually replace the broken brain networks by adding new neurons. “The potential for regenerative medicine is not to just delay disease, but to rebuild brain functionality,” says Ettenberg, BlueRock’s CEO. “There is a day when we hope that people don’t think of themselves as Parkinson’s patients.” Ettenberg says BlueRock plans to launch a larger study next year, with more patients, in order to determine whether the treatment is working, and how well.

This article is from The Spark, MIT Technology Review’s weekly climate newsletter.

When I was growing up near the US Gulf Coast, it was more common for my school to get called off for a hurricane than for a snowstorm. So even though I live in the Northeast now, by the time late August rolls around I’m constantly on hurricane watch. And while the season had been relatively quiet so far, a storm named Idalia changed that, making landfall in Florida as a Category 3 hurricane. (Also, let’s not forget Hurricane Hilary, which in a rare turn of events hit California.) Tracking these storms as they’ve approached the US, I decided to dig into the link between climate change and hurricanes. It’s fuzzier than you might think. But as I was reporting, I also learned that there are a ton of other factors affecting how much damage hurricanes do. So let’s dive into the good, the bad, and the complicated of hurricanes.

The good

The good news is that we’ve gotten a lot better at forecasting hurricanes and warning people about them, says Kerry Emanuel, a hurricane expert and professor emeritus at MIT. I wrote about this a couple of years ago in a story about new forecasting tools being adopted by the National Weather Service in the US. In the US, average errors in predicting hurricane paths dropped from about 100 miles in 2005 to 65 miles in 2020. Predicting the intensity of storms can be tougher, but two new supercomputers, which the agency received in 2021, could help those forecasts continue to improve too. Supercomputers aren’t the only tool forecasters are using to improve their models, though: some researchers are hoping that AI could speed up weather forecasting. Forecasting needs to be paired with effective communication to get people out of harm’s way by the time a storm hits, and many countries are improving their disaster communication methods.
Bangladesh is one of the world’s most disaster-prone countries, but the death toll from extreme weather has dropped quickly thanks to the country’s improved disaster warnings.

The bad

The bad news is that there are more people and more stuff in the storms’ way than there used to be, because people are flocking to the coast, says a hurricane researcher and forecaster at Colorado State University. The population along Florida’s coastline has grown, outpacing the growth nationally by a significant margin. That trend holds nationally: population growth in coastal counties in the US is happening at a quicker clip than in other parts of the country. Several insurance companies have already stopped doing business in Florida because of increasing risks, and this year’s hurricane season could affect the insurance market further. And the expected damage from disasters affects different groups in different ways. Across the US, white people and those with more wealth are more likely to get federal aid after disasters than others, according to one analysis.

The complicated

Climate change is loading the dice on most extreme weather phenomena. But what specific links can we make to hurricanes? A few effects are pretty well documented both in historical data and in climate models. One of the clearest impacts of climate change is rising temperatures. Warmer water can transfer more energy into hurricanes, so as global ocean temperatures hit new heights, hurricanes are more likely to become major storms. Warmer air can hold more moisture (think about how humid the air can feel on a hot day, compared with a cool one). Warmer, wetter air means more rainfall during hurricanes, and flooding is one of the deadliest aspects of the storms. And rising sea levels are making storm surges more severe and coastal flooding more common and dangerous. But there are other effects that aren’t as clear, and questions that are totally open. Most striking to me is that researchers are in total disagreement about how climate change will affect the number of storms that form each year.
For more on what we know (and what we don’t know) about climate change and hurricanes, check out my full story. Stay safe out there!

Related reading

Forecasting is a difficult task, but supercomputers and AI are both helping scientists better predict weather of all types.

Flooding is the deadliest part of hurricanes, and cities aren’t prepared to handle it.

New York City put in a lot of coastal flood defenses after Hurricane Sandy in 2012, a subject I covered after a later storm.

Millions lost power after Hurricane Ida, as my colleague James Temple wrote.

Keeping up with climate

This has been a summer of extreme weather, from heat waves to wildfires to flooding. Here are 10 data visualizations to sum up a brutal season.

A new battery manufacturing facility from Form Energy is being built on the site of an old steel mill in West Virginia. The factory could help revitalize the region’s flagging economy.

EV charging in the US is getting complicated. Here’s a great explainer that untangles all the different plugs and cables you need to know about.
→ Things are changing because many automakers are switching over to Tesla’s charging standard.

The first offshore wind auction in the Gulf of Mexico fell pretty flat, with two of three sites getting no bids at all. The lackluster results reveal the challenges facing offshore wind, especially in Texas.

A Chinese oil giant is predicting that gasoline demand in the country will peak this year, earlier than previously expected. Electric vehicles are behind diminishing demand for gas.
→ The “inevitable EV” was one of our picks for the 10 Breakthrough Technologies of 2023.

Vermont’s leading subsidy program for small battery installations is getting bigger.

On Wednesday, Baidu, one of China’s leading artificial-intelligence companies, announced it would open up access to its ChatGPT-like large language model, Ernie Bot, to the general public. It’s been a long time coming. Launched in March, Ernie Bot was the first Chinese ChatGPT rival. Since then, many Chinese tech companies, including Alibaba and ByteDance, have followed suit and released their own models. Yet all of them forced users to sit on waitlists or go through approval systems, making the products mostly inaccessible for ordinary users. People suspected this was a result of limits put in place by the Chinese state. On August 30, Baidu posted on social media that it would also release a batch of new AI applications within Ernie Bot as the company rolled out open registration the following day. Quoting an anonymous source, one outlet reported that regulatory approval will be given to “a handful of firms including fledgling players and major technology names.” A Chinese publication reported that eight Chinese generative AI chatbots have been included in the first batch of services approved for public release. ByteDance, which released the chatbot Doubao on August 18, and the Institute of Automation at the Chinese Academy of Sciences, which released Zidong Taichu 2.0 in June, are reportedly also included in the first batch. Other models from Alibaba, iFLYTEK, JD, and 360 are not. When Ernie Bot was released on March 16, the response was a mix of excitement and disappointment. Many people deemed its performance mediocre relative to the previously released ChatGPT. But most people simply weren’t able to see it for themselves. The launch event didn’t feature a live demonstration, and later, to actually try out the bot, Chinese users needed to have a Baidu account and apply for a use license that could take as long as three months to come through.
Because of this, some people who got access early were selling secondhand Baidu accounts on e-commerce sites, charging anywhere from a few bucks to over $100. More than a dozen Chinese generative AI chatbots were released after Ernie Bot. They are all pretty similar to their Western counterparts in that they are capable of conversing in text: answering questions, solving math problems, writing programming code, and composing poems. Some of them also allow input and output in other forms, like audio, images, data visualization, or radio signals. Like Ernie Bot, these services came with restrictions for user access, making it difficult for the general public in China to experience them. Some were allowed only for business uses. One of the main reasons Chinese tech companies limited access to the general public was concern that the models could be used to generate politically sensitive information. While the Chinese government has shown it’s extremely capable of censoring social media content, new technologies like generative AI could push the censorship machine to unknown and unpredictable levels. Most current chatbots, like those from Baidu and ByteDance, would refuse to answer sensitive questions about Taiwan or Chinese president Xi Jinping, but a general release to China’s 1.4 billion people would almost certainly allow users to find more clever ways to circumvent censors. When China released its first regulation specifically targeting generative AI services in July, it included a line requesting that companies obtain “relevant administrative licenses,” though at the time the law didn’t specify what licenses it meant. The approval Baidu obtained this week was reportedly issued by the Cyberspace Administration of China, the country’s main internet regulator, and it will allow companies to roll out their ChatGPT-style services to the whole country.
But the agency has not officially announced which companies obtained the public access license or which ones have applied for it. Even with the new access, it’s unclear how many people will use the products. The initial lack of access to Chinese chatbot alternatives decreased public interest in them. While ChatGPT has not been officially released in China, many Chinese people are able to access the OpenAI chatbot by using VPN software. “Making Ernie Bot available to hundreds of millions of Internet users, Baidu will collect massive valuable real-world human feedback. This will not only help improve Baidu’s foundation model but also iterate Ernie Bot on a much faster pace, ultimately leading to a superior user experience,” said Robin Li, Baidu’s CEO, according to a press release from the company. Baidu declined to give further comment. ByteDance did not immediately respond to a request for comment from MIT Technology Review.